BACKGROUND

Using evidence to guide clinical practice is widely recognized as the cornerstone of safe, effective, and patient-centred healthcare.1–3 A recent synthesis of a large body of literature on implementing evidence in healthcare consistently demonstrated improved outcomes, such as reduced length of stay and mortality, along with a positive return on investment (a metric used to evaluate the efficiency of an investment in healthcare).1 While the terminology for using evidence in clinical practice varies across the literature, including evidence-based practice (EBP), evidence-based medicine (EBM), evidence-informed decision-making, and evidence-informed practice (EIP), the concept is commonly understood as the integration of the best available research evidence, clinical expertise, and patient preferences to optimize outcomes, promote resource stewardship, and uphold professional accountability.4–6

In respiratory therapy, as in many health professions, EIP is embedded within national competency frameworks as a professional expectation.7 Its inclusion signals that all respiratory therapists (RTs) are expected to demonstrate the requisite knowledge, attitudes, and behaviours to enact EIP at entry to practice. Yet how this competency is interpreted and enacted in practice remains unclear, and a growing body of evidence indicates that RTs encounter significant challenges in using evidence in practice. For example, Clark et al.8 assessed students’ and faculty’s perceived self-efficacy in EBP and found low mean scores for participants’ knowledge of EBP (e.g., literature searching, understanding statistical tests, and interpreting syntheses) and for their confidence to use evidence in practice. Similar findings across studies point to a range of barriers.8–17 These include heavy workload demands that restrict the time available for engaging with evidence, experiences of burnout, and systemic issues such as limited access to research resources or organizational support. They also include profession-specific challenges, such as feelings of inadequacy linked to how the profession is perceived, the underappreciation of RTs’ expertise, and limited opportunities for mentorship to build confidence and capacity.8–17 Collectively, these challenges highlight the need for tailored educational interventions that both build individual skills and account for practice realities. Similarly, research across other health professions indicates that the enactment of EIP is often inconsistent and influenced by individual, contextual, and organizational factors,18–23 pointing to ongoing educational, structural, and implementation challenges.

As part of a larger program of research, our team has drawn on foundational literature, established frameworks from other health professions, and input from knowledge users to conceptualize EIP in respiratory therapy. We conceptualize EIP as a process involving three interrelated components: reflective practice, the ability to critically evaluate one’s decisions and actions to inform future care24,25; shared decision-making, working with people with lived experience to ensure that care decisions align with their values, needs, and preferences26,27; and research awareness, the skills needed to formulate clinical questions, locate relevant sources of evidence, and determine their trustworthiness and usability.28,29 This framework served as the basis for assessing current gaps within the respiratory therapy profession.

Given the resource-intensive nature of developing and implementing educational programming, it is important to first identify which components of EIP warrant targeted interventions in the respiratory therapy profession. A clear understanding of the current knowledge, attitudes, and behaviours related to EIP is necessary to ensure that any future educational strategies (e.g., continuing education offerings, entry-to-practice curricula) are relevant, feasible, and aligned with the learning needs and practice realities of RTs. Accordingly, the research question guiding this study was: What is the current state of knowledge, attitudes, and behaviours of RTs in Canada related to the core components of EIP?

METHODS

Study design

We used a cross-sectional survey design to assess the knowledge, attitudes, and behaviours of RTs in Canada related to three core components of EIP: reflective practice, shared decision-making, and research awareness. To minimize response burden, each participant was randomly assigned to complete one of three surveys, each focused on a single EIP component.

To ensure our study addressed issues relevant to practice and professional priorities, we engaged a group of knowledge users as a steering committee throughout the project. Knowledge users are defined as individuals positioned to contribute expertise to knowledge production processes and/or who will influence, administer, or actively use the research results in education, professional development, practice, or policy.30 In this study, the knowledge users were the board of directors of the professional respiratory therapy association in Canada (the Canadian Society of Respiratory Therapists [CSRT]), whose members included respiratory therapy leaders, educators, clinicians, and students (n = 11). They advised on the study design and conceptual framework and reviewed draft surveys for contextual relevance. This study is reported according to the Consensus-Based Checklist for Reporting of Survey Studies (CROSS).31

Participants and recruitment

We performed a sample size calculation using a 95% confidence level, a 5% margin of error, and a conservative population proportion estimate of 50% (p = 0.5) to support generalizability of the findings to the population of approximately 12,291 credentialed RTs in Canada.32 This yielded a minimum sample size of 373 participants per component of EIP (total n = 1119).33 To enhance the likelihood of reaching the target sample size, we sent invitations to twice the number of participants required per survey (total n = 2238). This strategy was intended to account for anticipated nonresponse and improve the probability of achieving sufficient statistical power.34
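The reported minimum of 373 per component can be reproduced with Cochran’s formula for a proportion followed by a finite population correction. The study cites an external calculator (reference 33) rather than naming its formula, so the sketch below assumes this standard approach, with z = 1.96 for 95% confidence; the function name is hypothetical.

```python
import math


def cochran_sample_size(population: int, z: float = 1.96,
                        p: float = 0.5, margin: float = 0.05) -> int:
    """Minimum sample size for estimating a proportion.

    Assumed method: Cochran's formula followed by a finite population
    correction (hypothetical helper, not the cited calculator).
    """
    # Infinite-population estimate: n0 = z^2 * p * (1 - p) / e^2
    n0 = z ** 2 * p * (1 - p) / margin ** 2
    # Finite population correction: n = n0 / (1 + (n0 - 1) / N)
    n = n0 / (1 + (n0 - 1) / population)
    # Round up, since a fractional participant cannot be recruited
    return math.ceil(n)


# Approximately 12,291 credentialed RTs in Canada
per_component = cochran_sample_size(population=12_291)  # 373
total_required = 3 * per_component                      # three surveys: 1119
invitations = 2 * total_required                        # doubled for nonresponse: 2238
print(per_component, total_required, invitations)
```

Without the correction the estimate would be about 385 per component; the correction matters little here because the sample is small relative to the population of RTs.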

We employed a stratified random sampling approach to recruit practicing RTs across Canada. Random selection ensured that each member of the population had an equal probability of selection, thereby minimizing selection bias. Stratification was based on province or territory to support proportional geographic representation. Eligible participants were credentialed RTs currently practicing in Canada. We excluded student RTs because the survey items pertained to current practice.

Representatives from the CSRT and the Ordre Professionnel des Inhalothérapeutes du Québec (OPIQ) (the regulatory body for RTs in Québec), who were not affiliated with the research project, managed the randomization and distributed survey invitations to their members via email. In May 2025, the CSRT distributed survey invitations to the randomized RTs in all provinces and territories outside of Québec, followed by three reminder emails sent at two-week intervals. In June 2025, the OPIQ distributed the survey to randomized participants in Québec without follow-up reminders, in accordance with its organizational protocol. Data collection for all surveys closed in July 2025. This two-organization process supported linguistic and geographic inclusivity.

We administered the surveys through SurveyMonkey, which automatically assigned each participant a unique identifier to preserve anonymity. As an incentive, participants who voluntarily provided an email address after completing the full survey were entered into a random draw for one of 30 CAD $100 gift cards or one of five conference registrations. Email addresses were collected separately from survey responses. Prizes were allocated according to the stratified sampling strategy.

Instrument

We used three distinct surveys, each targeting one of the three components of EIP: reflective practice, shared decision-making, and research awareness. The decision to focus on these three components was made following a meeting with the knowledge users, where we presented the rationale for the project and the conceptual framework of EIP. During the meeting, the knowledge users provided feedback and engaged in discussions with the research team, supporting the decision to measure all three components. We then determined that including all components of EIP in a single instrument would be too onerous for respondents. As a result, we adapted three thematically aligned but distinct surveys and randomized respondents to complete one of the three to minimize response burden.

To investigate the reflective practice component, we adopted the Reflective Practice Questionnaire.35,36 This tool has been used across a range of health professions, including with medical students, surgeons, nurses, and allied health professionals, and assesses the degree to which individuals engage in critical evaluation of their clinical actions and experiences.35,36 The original questionnaire consists of nine subscales. For the purposes of this study, we selected seven subscales and mapped them to our target domains as follows: the “self-appraisal” and “uncertainty” subscales were used to represent knowledge (6 items); “confidence-general,” “confidence-communication,” and “stress” were used to represent attitudes (12 items); and “reflection-in-action” and “reflection-on-action” were used to represent behaviours (8 items). We removed the subscales “job satisfaction” and “reflection with others” as they were not conceptually aligned with our framework. The 26 items are rated on a 6-point Likert scale.

To investigate the shared decision-making component, we developed a composite survey by integrating items from multiple sources, as our literature review, confirmed through personal communications with researchers working in this area, identified no single validated questionnaire that comprehensively captured knowledge, attitudes, and behaviours. While this approach was informed by theory and prior measures, the composite survey has not undergone full psychometric validation and should therefore be considered preliminary. Knowledge was assessed using true/false items adapted from surveys developed by Yen et al.37 and Hoffman et al.,38 both of which were grounded in expert consensus and a literature review (14 items). Attitudes were measured using items from the 9-item IcanSDM scale by Giguère et al.,39 a recently developed instrument with emerging evidence of validity,40,41 scored on a 0–10 visual analogue scale (8 items). Behaviours were assessed using items adapted from Hoffman et al.,38 based on the patient-oriented Shared Decision-Making Questionnaire (SDM-Q),42 and scored on a 6-point Likert scale (12 items). The resulting survey included 34 items.

To investigate the research awareness component, we adapted the Health Sciences–Evidence-Based Practice (HS-EBP) questionnaire as it was developed with input from diverse healthcare professionals, includes questions related to the practice context (often overlooked in other instruments) and demonstrates strong evidence of reliability and validity across various health professions.43,44 The original HS-EBP consists of five subscales; however, for the purposes of this study we used three of its subscales. The “results from scientific research” subscale was used to represent knowledge (14 items). The “beliefs and attitudes” subscale was used to represent attitudes (12 items), and the “development of professional practice” subscale was used to represent behaviours (10 items). We did not use the remaining two subscales, as they were not directly relevant to the domains under investigation. The 36 items are scored on a 10-point numeric scale.

Each EIP component survey also contained the same two open-ended questions: “In your opinion, what are the features of EIP?” and “What topics, training or learning activities would help you improve your ability to use EIP in your work?” Finally, each survey collected the same 12 sociodemographic variables: the province or territory in which respondents currently work, self-identified gender, race,32 language, level of education, age, years worked in respiratory therapy, type of practice setting, geographic setting, public vs. private workplace, and employment status.

The research team and one knowledge user (representing the full steering committee) reviewed and revised the items on each component-specific survey to ensure relevance to the respiratory therapy context and to contribute evidence of content validity. After reviewing the items, we uploaded the draft surveys to SurveyMonkey and shared them with the broader knowledge user group for pilot testing. We asked the knowledge users to complete the three surveys and comment on item clarity, structure, and relevance. Of the 11 knowledge users invited, seven (64%) completed the pilot and submitted comments, which the research team reviewed and discussed.

Using the pilot test data, we conducted preliminary psychometric analyses for each survey. For the research awareness survey, Cronbach’s alpha was calculated for the entire scale and its subscales. Internal consistency was strong overall (α = 0.95), although one item showed a negative item-total correlation (–0.32). We retained this item because it was included in previously validated versions of the survey and because the pilot sample was small. The reflective practice survey demonstrated high internal consistency (α = 0.96), with values exceeding 0.90 across all subscales. For the shared decision-making survey, internal consistency was excellent, with alpha values of 0.90 for the attitude subscale and 0.97 for the behaviour subscale. For the knowledge subscale, which consisted of dichotomous true/false items, we used the Kuder-Richardson Formula 20 (KR-20) to assess internal consistency reliability. The coefficient was 0.63, which is considered acceptable for an exploratory study.45 Following the pilot, all survey instruments underwent forward and back translation (English–French) according to best practices to ensure linguistic and conceptual equivalence across languages.46 Supplemental File 1 includes the final English and French surveys.
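As an illustration of the two reliability coefficients above, the following minimal sketch computes Cronbach’s alpha and KR-20 from a respondents-by-items matrix. It is not the software used in the study, and the function names and example data are hypothetical. KR-20 is the special case of alpha for dichotomous (0/1) items, in which each item’s variance equals p(1 − p); population variances are used throughout so that the two coefficients agree on binary data.

```python
from statistics import pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # transpose rows to item columns
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def kr20(responses: list[list[int]]) -> float:
    """Kuder-Richardson Formula 20 for dichotomous (1 = correct, 0 = incorrect) items."""
    k = len(responses[0])
    n = len(responses)
    # For a 0/1 item, the variance is p * q, where p is the proportion correct
    pq_sum = sum((sum(col) / n) * (1 - sum(col) / n) for col in zip(*responses))
    total_var = pvariance([sum(row) for row in responses])
    return k / (k - 1) * (1 - pq_sum / total_var)


# Hypothetical true/false responses: 4 respondents x 3 items
answers = [[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]
print(cronbach_alpha(answers), kr20(answers))  # 0.75 0.75
```

A negative item-total correlation, such as the –0.32 observed in the pilot, indicates an item whose responses run against the rest of the scale, and such an item tends to lower alpha.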

Research ethics

This study was approved by the University of Ottawa’s Research Ethics Board (H-11-24-10931). Informed consent was obtained electronically, with participants required to review the consent document and agree before accessing the survey.

Data analysis

Survey responses were exported from SurveyMonkey and analyzed using IBM SPSS Statistics version 29.0 (IBM Corp., Armonk, New York). We grouped participants based on the EIP component of the survey they received. Only surveys that were at least 75% complete were included in the analysis.

Scoring procedures

We calculated subscale scores for knowledge, attitudes, and behaviours by computing the mean of all items within each domain for each survey. To enable meaningful comparison across surveys, we normalized the scores to a 0–100 scale. We assessed the internal consistency of each survey using Cronbach’s alpha.
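The text does not specify the normalization formula, so the sketch below assumes linear min–max rescaling of each domain mean against the possible range of its response scale, (mean − min) / (max − min) × 100, with true/false knowledge items coded 1 = correct and 0 = incorrect. The coding of the 6-point Likert items as 1–6 is likewise an assumption, and the function name is hypothetical.

```python
from statistics import mean


def normalize_0_100(item_scores: list[float], scale_min: float, scale_max: float) -> float:
    """Rescale the mean of one respondent's domain items to a 0-100 scale.

    Assumes linear min-max rescaling against the possible range of the
    response scale (not the range observed in the sample).
    """
    if scale_max <= scale_min:
        raise ValueError("scale_max must be greater than scale_min")
    return (mean(item_scores) - scale_min) / (scale_max - scale_min) * 100


# Hypothetical respondents, one per response format
likert = normalize_0_100([4, 5, 6, 5], scale_min=1, scale_max=6)       # 6-point Likert, coded 1-6 (assumed)
vas = normalize_0_100([7.5, 8.0, 6.5], scale_min=0, scale_max=10)      # 0-10 visual analogue scale
true_false = normalize_0_100([1, 1, 0, 1], scale_min=0, scale_max=1)   # proportion correct
print(round(likert, 1), round(vas, 1), round(true_false, 1))           # 80.0 73.3 75.0
```

Rescaling against the possible range, rather than the observed range, keeps a given normalized score comparable across samples.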

Descriptive and comparative analyses

We used descriptive statistics (means [M] and standard deviations [SD]) to summarize scores for each domain (knowledge, attitudes, behaviours) within the three components of EIP: reflective practice, shared decision-making, and research awareness. We conducted one-way analyses of variance (ANOVA) to assess differences between EIP components for each domain. When assumptions of normality or homogeneity of variance were violated, we used Kruskal-Wallis tests as non-parametric alternatives. We conducted post hoc comparisons using Tukey’s HSD test to examine pairwise differences. We adopted a significance level of α = 0.05 for all statistical tests.
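To make the ANOVA quantities concrete, the minimal sketch below computes the F statistic and the eta-squared (η²) effect size reported in the Results from raw group scores, using only the Python standard library. It is illustrative rather than the SPSS procedure used in the study; a p-value would additionally require the F distribution (for example, via scipy.stats.f_oneway), and scipy.stats.kruskal and scipy.stats.tukey_hsd cover the non-parametric and post hoc steps. The function name and data are hypothetical.

```python
from statistics import fmean


def one_way_anova(groups: list[list[float]]) -> tuple[float, float]:
    """Return (F, eta_squared) for a one-way between-groups ANOVA."""
    scores = [x for g in groups for x in g]
    grand_mean = fmean(scores)
    group_means = [fmean(g) for g in groups]
    k, n = len(groups), len(scores)
    # Between groups: group size times squared distance of group mean from grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within groups: squared distance of each score from its own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    f_stat = (ss_between / (k - 1)) / (ss_within / (n - k))
    # Eta squared: share of total variance (SS_between + SS_within) explained by group
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


# Hypothetical scores for three groups
f_stat, eta_sq = one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(f_stat, 2), round(eta_sq, 3))  # 27.0 0.9
```

Here the between-groups sum of squares is 54 and the within-groups sum is 6, so group membership accounts for 90% of the total variance.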

To further contextualize these primary analyses and identify potential equity or practice-specific factors, we conducted exploratory analyses examining whether EIP domain scores varied by sociodemographic and practice-related characteristics. We selected specific variables (gender, race, education level, practice setting, geographic location, age, and years in practice) a priori based on previous literature suggesting their potential influence on healthcare professionals’ use of evidence.47–49 We conducted independent-samples t-tests to compare scores between two-level groups (e.g., gender: participants self-identifying as women compared with men or those who preferred not to answer), and Pearson’s correlations for continuous variables (age, years in practice). Given their exploratory nature, these analyses were intended to generate hypotheses for future empirical research and to identify patterns that may inform the design of targeted educational strategies.
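The two exploratory statistics can be sketched as follows. The text does not state whether a pooled-variance (Student) or unequal-variance (Welch) t-test was used, so this sketch assumes the pooled version; p-values, which require the t distribution, are omitted. On Python 3.10 or later, statistics.correlation computes the same Pearson coefficient. Function names and data are hypothetical.

```python
import math
from statistics import fmean, variance


def pooled_t(a: list[float], b: list[float]) -> float:
    """Student's independent-samples t statistic with a pooled variance.

    Assumes equal variances; Welch's test would not pool the variances.
    """
    na, nb = len(a), len(b)
    # Pooled variance weights each sample variance (n - 1 denominator) by its df
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (fmean(a) - fmean(b)) / math.sqrt(sp2 * (1 / na + 1 / nb))


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson product-moment correlation between two paired variables."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: behaviour scores for two groups, and age vs. attitude score
print(round(pooled_t([60, 65, 70], [50, 55, 60]), 3))            # 2.449
print(round(pearson_r([30, 40, 50, 60], [80, 75, 70, 65]), 3))  # -1.0 (perfectly linear)
```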

Open-ended question analysis

We pooled the open-ended responses from the three surveys into a single dataset and analyzed them using qualitative content analysis.50 Because participants often listed multiple features, we segmented responses into discrete meaning units, coded these units inductively, grouped the codes into preliminary categories, and iteratively refined the categories into broader themes. Each participant’s response was then coded dichotomously for each theme (i.e., 1 = present, 0 = absent), allowing us to calculate the frequencies and proportions of respondents who mentioned each feature.
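The dichotomous coding step can be sketched as follows: each respondent contributes at most one count per theme, and frequencies are divided by a fixed denominator. The percentages reported in the Results appear to use all 288 analyzed participants as that denominator (for example, 228/288 = 79.2%), which this hypothetical helper takes as a parameter; the theme labels and respondent IDs are illustrative.

```python
def theme_frequencies(coded: dict[str, set[str]], denominator: int) -> dict[str, tuple[int, float]]:
    """Count respondents mentioning each theme and convert to a percentage.

    `coded` maps a (hypothetical) respondent ID to the set of themes coded as
    present (1) in that response; a set ensures each theme counts once per person.
    """
    counts: dict[str, int] = {}
    for themes in coded.values():
        for theme in themes:
            counts[theme] = counts.get(theme, 0) + 1
    return {theme: (c, round(100 * c / denominator, 1)) for theme, c in counts.items()}


# Hypothetical coded responses; respondent r3 left the question blank
coded = {
    "r1": {"research evidence", "clinical expertise"},
    "r2": {"research evidence"},
    "r3": set(),
    "r4": {"contextual factors"},
}
for theme, (n, pct) in sorted(theme_frequencies(coded, denominator=len(coded)).items()):
    print(f"{theme}: {n} ({pct}%)")
```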

RESULTS

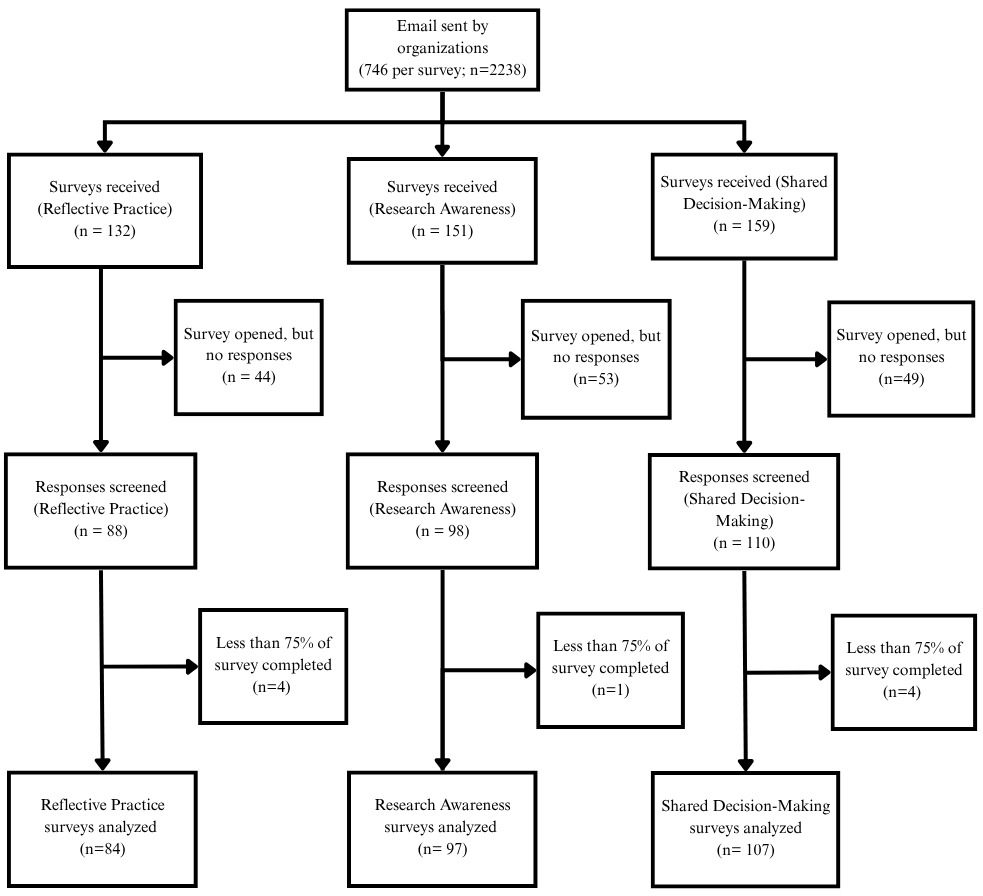

The three surveys were accessed 442 times. After removing non-responses and incomplete data, we analyzed data from 288 participants (overall response rate = 12.9%). By survey, 84 participants (11.3% response rate) completed the reflective practice survey, 107 (14.3% response rate) completed the shared decision-making survey, and 97 (13.0% response rate) completed the research awareness survey (Figure 1).

Participant characteristics

The groups showed comparable demographic characteristics, with no statistically significant differences in age, gender, race, or years of clinical experience (Table 1). Participants had a mean age of 42.4 years (SD = 10.8), and the majority self-identified as women (n = 216; 77.4%) and White (n = 214; 77%). Most held either an RT diploma (n = 134; 48.0%) or a diploma and a bachelor’s degree (n = 110; 39.4%), and participants had an average of 17.6 (SD = 16.0) years of experience. Participants were employed across various healthcare settings, with 44.8% working in tertiary care hospitals. Most participants reported working in urban areas (n = 215; 77.1%) and held full-time positions (n = 208; 74.6%).

Primary findings

Mean scores for knowledge, attitudes, and behaviours varied across the three components of EIP: reflective practice, shared decision-making, and research awareness (Table 2). Shared decision-making yielded the highest mean score for knowledge (M = 86.4, SD = 6.4), whereas research awareness had the highest scores for attitudes (M = 81.4, SD = 13.4) and behaviours (M = 78.3, SD = 10.4). In contrast, reflective practice consistently showed the lowest mean scores across all three domains.

One-way ANOVAs revealed statistically significant group differences for knowledge, attitudes, and behaviours (all p < .001) (Table 3). The effect sizes were large for knowledge (η² = .693) and attitudes (η² = .656), and moderate for behaviours (η² = .187). Post hoc analyses using Tukey’s HSD test confirmed significant pairwise differences across all three EIP components for each domain (Supplemental File 2).

Exploratory analyses

To further contextualize the primary findings, we explored whether knowledge, attitudes, and behaviours varied by sociodemographic and practice-related characteristics (Table 4). We identified several patterns. For reflective practice, women reported higher behaviour scores than men or those who preferred not to answer (M = 63.3 vs. 56.3, p = 0.04). RTs working in suburban settings scored higher than those in urban settings for both knowledge (M = 44.7 vs. 39.5; p = 0.03) and behaviours (M = 70.0 vs. 59.0; p = 0.004). Participants in non-academic settings reported higher attitude scores (M = 55.8 vs. 49.9; p = 0.004) and behaviour scores (M = 64.2 vs. 57.2; p = 0.03) than those working in academic-affiliated institutions.

For shared decision-making, participants who identified as a race other than White reported higher behaviour scores than White participants (M = 76.8 vs. 67.5; p = 0.007). Weak negative associations were also observed between attitudes and both age (r = –.26; p < .01) and years in practice (r = –.22; p < .05), suggesting that older or more experienced RTs reported less positive attitudes toward shared decision-making. Beyond the difference by race, we observed no group differences by gender, education, or practice setting. However, more years in practice were positively associated with knowledge (r = .22; p < .05).

For research awareness, we observed no group differences by gender, race, education level, or practice setting. Years in practice were significantly and positively correlated with knowledge scores (r = .22, p < .05). Supplemental File 3 contains all exploratory statistical results.

Open-ended responses

A total of 268 participants (93.1%) responded to the open-ended question, “What are the features of EIP?” This included 82 participants in the reflective practice group (97.6%), 99 in the shared decision-making group (92.5%), and 87 in the research awareness group (89.7%). We identified nine themes using qualitative content analysis. The most frequent theme related to research evidence, such as the use of scientific research, guidelines, and hierarchies of evidence as the foundation of practice (228 instances; 79.2%). This was followed by clinical expertise and professional responsibility, referring to clinicians’ knowledge, skills, and accountability in applying evidence (68 instances; 23.6%), and contextual factors, described as acknowledging the influence that healthcare settings, organizational structures, and broader system-level factors have in shaping EIP (49 instances; 17.0%).

Similarly, 251 participants (87.2%) responded to the open-ended question, “What topics, training, or learning activities would help you improve your ability to use EIP in your work?” By group, 78 participants in the reflective practice group (92.9%), 91 in the shared decision-making group (85.0%), and 81 in the research awareness group (83.5%) responded. We identified seven themes (Table 5). The most frequent related to condition- and content-specific application of evidence, that is, the desire for training on applying evidence to specific clinical conditions, contexts, or patient populations (85 instances; 29.5%). Participants also described their preferred learning formats, which included journal clubs, webinars, and modules, among others (71 instances; 24.7%). Finally, participants expressed a desire to develop skills to better engage with research, such as training to build knowledge and technical skills in locating, interpreting, and appraising research evidence, including understanding statistical tests and different types of methodologies (61 instances; 21.2%).

DISCUSSION

The purpose of this study was to describe the current state of knowledge, attitudes, and behaviours of RTs in Canada related to three core components of EIP: reflective practice, shared decision-making, and research awareness. Our results show that no component demonstrated consistently high scores across knowledge, attitudes, and behaviours. Even when one domain scored relatively high (for example, knowledge in shared decision-making), the related domains (i.e., attitudes and behaviours) of the same component were comparatively low. While reflective practice had the lowest scores across knowledge, attitudes, and behaviours, scores in all three components indicated room for improvement, suggesting that all areas of EIP require strengthening in the respiratory therapy profession. Such variability in scores also suggests that focusing on individual components in isolation may be limiting. Although reflective practice, shared decision-making, and research awareness can be described separately for analytical purposes, in practice they are interdependent and mutually reinforcing. For instance, the ability to critically reflect on clinical decisions is influenced by a healthcare professional’s awareness of relevant research evidence, and both are enriched when decisions are made collaboratively with patients.24,28,51 Treating the components as entirely separate risks overlooking these synergies and underestimating the complexity of how EIP is enacted in clinical contexts. Therefore, a more integrated approach may be necessary to guide both understanding and the development of educational strategies that equip RTs to enact EIP as a unified process. Participants’ open-ended responses echoed this view, often describing EIP as a relationship among multiple components, reinforcing that teaching or learning the EIP components in isolation may not fully capture how EIP is enacted.

In the open-ended responses describing the features of EIP, participants placed a disproportionately high emphasis on research evidence (e.g., scientific studies, randomized clinical trials, clinical guidelines, meta-analyses, and hierarchies of evidence), which aligns with the high mean scores for research awareness attitudes in the results, suggesting that RTs in this sample place strong value on research as the central feature of EIP. In contrast, comparatively fewer participants described reflective practice or shared decision-making as central to EIP, which aligns with the lower quantitative scores observed for those components. This finding is not unexpected, as the respiratory therapy profession, like many others, has been influenced by the dominance of hierarchies of evidence and the privileging of population-level data.22,52–54 While this orientation highlights the respiratory therapy profession’s commitment to grounding care in research, it also suggests the importance of broadening what counts as evidence in practice. Supporting RTs to enact EIP may therefore require rebalancing the emphasis on traditional notions of evidence while reprioritizing and teaching the value of other sources of knowledge, including (but not limited to) individual patient experiences and shared decision-making, local and contextual data, Indigenous, experiential, and other ways of knowing.5,6,54–57

Participants’ open-ended responses about training needs further highlighted the importance of tailoring education to context. Most indicated a preference for learning opportunities directly relevant to their own practice environment (e.g., condition- or population-specific), suggesting that generic training in EIP may be insufficient. To be robust, educational programming should be designed to help participants incorporate their own context into learning (e.g., through case discussions, assessments, or presentations).58–61 Participants also identified a wide range of preferred learning formats (e.g., journal clubs, webinars, modules), which aligns with broader EBP literature on the importance of multifaceted, flexible, and diverse delivery approaches.58,59 Taken together, these findings suggest that those involved in education (e.g., educators, professional development coordinators) may consider prioritizing practical, context-sensitive opportunities, such as embedding journal clubs into workplace routines, developing modular online content for self-paced learning, or offering case-based workshops on condition-specific applications. Ensuring flexibility in both content and format may help increase uptake and relevance for RTs working across varied settings.

We identified several exploratory trends that warrant future empirical investigation. For example, reflective practice scores appeared to differ by both geography and practice setting, with suburban RTs reporting higher scores than their urban counterparts, and those working in non-academic environments (e.g., rehabilitation centres, community hospitals, primary care) reporting more positive attitudes and stronger behaviours than those in academic settings. These findings raise important questions about how practice context shapes opportunities for reflection. Possible explanations include greater self-reliance in smaller or less resource-intensive environments, differences in caseloads, expectations related to teaching, availability of resources, or team dynamics (e.g., limited space and recognition for reflective practice).62,63 However, given the sample size and response rate, these results should be interpreted cautiously and considered hypothesis-generating rather than definitive. The influence of practice context on reflective practice and other factors among RTs represents an important area for future research.

We also identified variations related to shared decision-making. Notably, participants who identified as a race other than White reported higher behaviour scores than White participants. This trend may reflect the possibility that individuals from minoritized backgrounds place greater emphasis on inclusivity and patient involvement in clinical decision-making, perhaps informed by lived experiences of inequity or marginalization in healthcare contexts.64,65 In contrast, older participants reported less positive attitudes toward shared decision-making, which may reflect a generational or cohort effect, given that shared decision-making represents a more recent paradigm in healthcare practice.51 Older professionals, who may have been trained in a more biomedical model of care, could be less inclined to view shared decision-making as central to their professional role.66–68 Likewise, more years in practice were associated with less positive attitudes toward shared decision-making, paralleling the findings related to age. This trend could reflect professional norms within healthcare over time, in which more experienced clinicians perceive shared decision-making as impractical or time-consuming in certain clinical settings, despite evidence suggesting otherwise.69 Together, these findings highlight the potential influence of demographic and experiential factors on RTs’ engagement with shared decision-making. More targeted research on how shared decision-making is defined and interpreted among RTs would be beneficial.

Overall, the findings reveal a consistent pattern in how RTs engage with EIP. Although RTs value research evidence and view it as central to their professional role, this emphasis often overshadows the reflective and shared decision-making dimensions of EIP. The lower scores in reflective practice and shared decision-making, along with the open-ended comments that privileged research evidence, suggest that EIP is often interpreted as applying external knowledge rather than as an integrated process that combines reflection, context, and patient partnership. Strengthening EIP in practice may therefore require rebalancing these dimensions so that reflective practice, shared decision-making and research awareness are treated as interdependent rather than distinct activities.

Strengths and limitations

This study has several strengths. We used a rigorous stratified random sampling strategy to minimize selection bias and obtain a more generalizable sample that better reflects the distribution of RTs across Canada. To measure the three core components of EIP, we adapted three thematically linked surveys with some evidence of validity that were endorsed in collaboration with knowledge users. Another strength was involving knowledge users throughout the survey design process (e.g., conceptualization, instrument adaptation). Their feedback enhanced both the relevance and content validity of the surveys. Finally, a high proportion of participants who responded to the survey also provided open-ended responses, allowing for both quantitative and qualitative insights into the research question.

No study is without limitations. First, the surveys used in this study warrant consideration. For reflective practice and research awareness, we adapted existing instruments that had undergone psychometric testing in other health professional populations, which may limit their direct applicability to the respiratory therapy population. In addition, the reflective practice survey was adapted by selecting and re-mapping subscales to align with our framework. Although this process enhanced conceptual fit, it may have affected validity and reduced comparability with other studies that used the original subscales. Furthermore, given the limited availability of comprehensive measures for shared decision-making, we developed a composite survey informed by theory and existing items. Although we constructed each survey according to best practices to support content validity, and internal consistency analyses provided preliminary evidence of reliability, each survey requires more extensive psychometric validation before broader use.

Another limitation is the low overall response rate, which may limit the generalizability of the findings. However, we compared our sample with national registry data and found that participants were broadly similar to the Canadian respiratory therapy population in terms of age and self-reported gender. For example, the proportion of women in our study (77.4%) closely matched national statistics.32,70–72 A separate study of Canadian RTs using a convenience sample also showed similar patterns for race and employment characteristics.16 These comparisons provide some reassurance that our sample resembles the Canadian RT population with respect to age, gender, race and employment characteristics, but caution remains warranted when interpreting the findings. Furthermore, survey administration was not uniform across Canada: participants in Québec received only one invitation to complete the survey due to local policy, whereas multiple reminders were used elsewhere. Finally, although we report several exploratory findings related to EIP among RTs, these should be interpreted with caution given the response rate and the exploratory nature of the analyses. More focused empirical studies are needed to confirm these findings.

CONCLUSION

Using a stratified random sampling approach, we assessed the current state of the knowledge, attitudes, and behaviours of RTs in Canada related to three core components of EIP: reflective practice, shared decision-making, and research awareness. No component demonstrated consistently high scores, and reflective practice was consistently the lowest across all domains. Participants emphasized research evidence as central to EIP, while patient and contextual factors were less frequently acknowledged, highlighting the need to broaden how evidence is understood and applied in practice. Preferences for training emphasized contextual relevance and flexible delivery formats, underscoring the importance of tailoring future educational strategies to RTs’ current practice. Our exploratory analyses indicate that certain sociodemographic and contextual factors may shape how RTs engage with EIP. Together, these findings reinforce the need to strengthen all dimensions of EIP in the respiratory therapy profession and provide a foundation for designing educational interventions and future research that support its enactment in practice.

Funding

MZ is supported by a Banting Postdoctoral Fellowship (#509780) from the Canadian Institutes of Health Research (CIHR), and IDG is a recipient of a CIHR Foundation Grant (FDN#143237).

Competing interests

All authors have completed the ICMJE uniform disclosure form. MZ is the deputy editor of the CJRT, and AJW is an associate editor of the CJRT. Neither was involved in any decision regarding this manuscript. IDG reports no conflicts of interest.

Ethical Approval

This study was approved by the University of Ottawa’s Research Ethics Board (H-11-24-10931).

AI Statement

No generative AI or AI-assisted technology was used to generate this manuscript or its content.

Acknowledgement

We would like to thank Tim Ramsay, PhD, for providing statistical advice throughout the project; the Canadian Society of Respiratory Therapists and the Ordre Professionnel des Inhalothérapeutes du Québec for facilitating recruitment; and Sébastien Tessier, RRT, MBA, for providing feedback on the draft survey items. We also thank all participants for taking the time to respond to the survey.